Integrating AI Providers for Assisted Remediation

BoostSecurity's AI integration adds AI-assisted remediation for vulnerabilities directly in your pull requests. Once you connect an AI provider, BoostSecurity uses its language models to generate code suggestions and comments that help your developers fix security issues faster.

This guide walks you through connecting and configuring an AI provider, such as OpenAI, Bedrock, Gemini, or Anthropic, in the BoostSecurity platform.

Key Benefits

- Automated Code Suggestions: Receive AI-generated code snippets to fix detected vulnerabilities.

- Seamless SCM Integration: View suggestions and comments directly in your existing pull requests across GitHub, GitLab, Azure DevOps (ADO), and Bitbucket.

- Flexible Provider Support: Choose from a range of leading AI providers and models to fit your organization’s needs.

- Improved Developer Experience: Empower developers to resolve security findings without leaving their workflow.

- Context-Aware Fixes: AI consumes repository guidelines (e.g., `cloud.md`, `gemini.md`) to enforce project-specific conventions.

- Safer Remediation Workflows: Secrets are masked before any code is sent to the AI provider.

Prerequisites

Before you begin, ensure you have the following:

- AI Provider Account: An active account with an AI provider (e.g., OpenAI, Bedrock, Google AI, Anthropic) and a valid API key.

- Compatible AI Model: The selected AI model must support text-based analysis and generation for code review. Models designed for chat, code, or text are recommended (e.g., `gpt-4`, `claude-sonnet`, `gemini-pro`). Using models specialized in image or audio processing may result in errors or empty results.
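As a rough illustration of the compatibility requirement, the sketch below screens a list of model identifiers by name and keeps the ones that look text-oriented. The keyword lists and function names are assumptions made for this example; this is not how BoostSecurity selects models, and the provider's own documentation remains the authority on what each model supports.

```python
# Hypothetical name-based heuristic, for illustration only.
# It does not reflect BoostSecurity's actual model handling.
TEXT_HINTS = ("gpt", "claude", "gemini", "chat", "code", "text")
NON_TEXT_HINTS = ("image", "audio", "speech", "tts", "whisper", "dall-e", "embedding")


def looks_text_capable(model_id: str) -> bool:
    """Return True if the model name suggests text understanding and generation."""
    name = model_id.lower()
    # Exclusions win: "text-embedding-..." contains "text" but cannot write comments.
    if any(hint in name for hint in NON_TEXT_HINTS):
        return False
    return any(hint in name for hint in TEXT_HINTS)


def filter_text_models(model_ids):
    """Keep only the identifiers that pass the name heuristic, preserving order."""
    return [m for m in model_ids if looks_text_capable(m)]
```

Passing a mixed list such as `["gpt-4", "claude-sonnet", "gemini-pro", "whisper-1", "dall-e-3"]` to `filter_text_models` keeps the first three entries and drops the audio and image models.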

Integration Steps

Follow these steps to configure the AI integration:

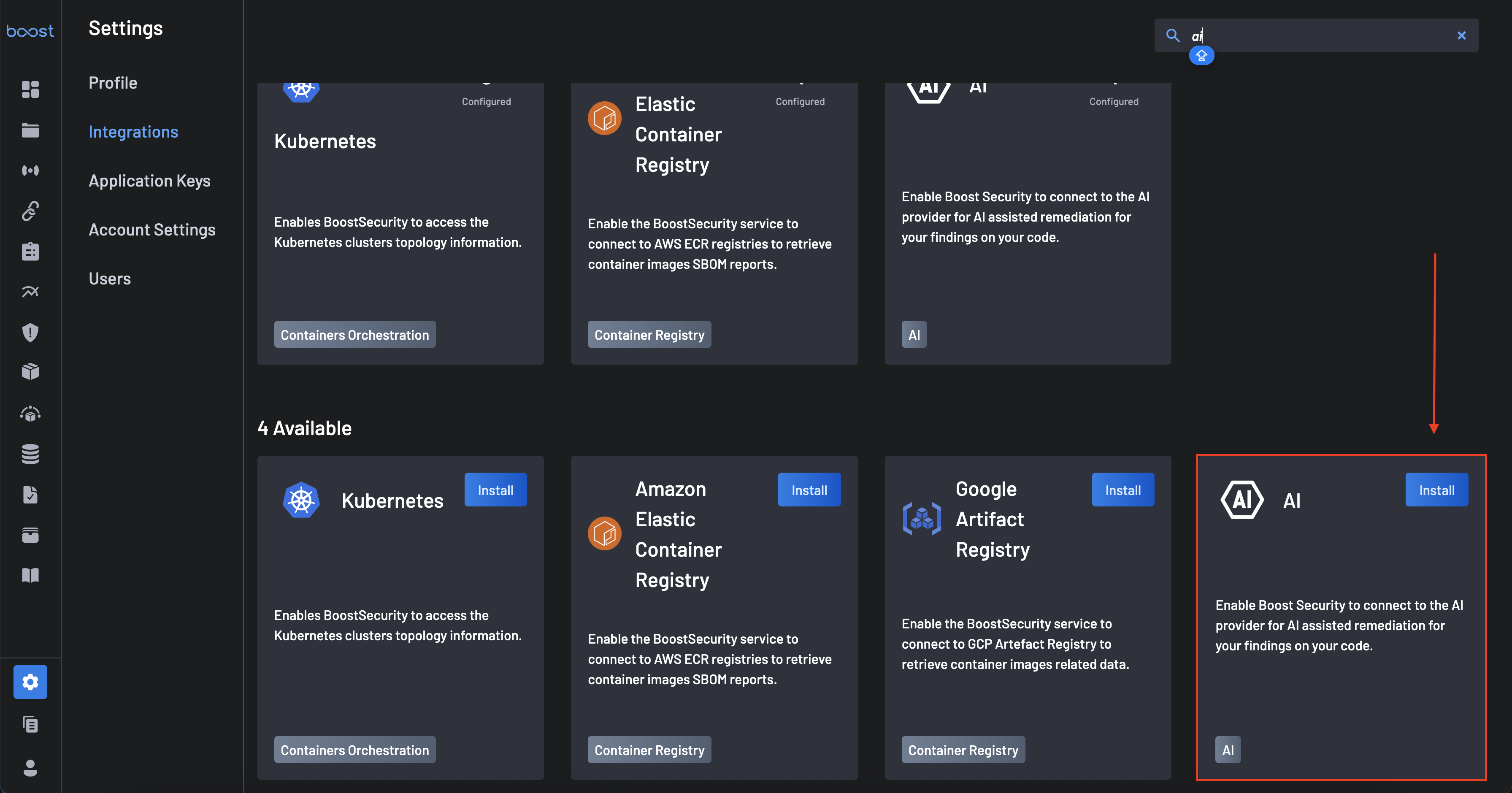

- Navigate to the Integrations page on your BoostSecurity dashboard.

- Under the "Available" section, locate the AI integration card and select it.

-

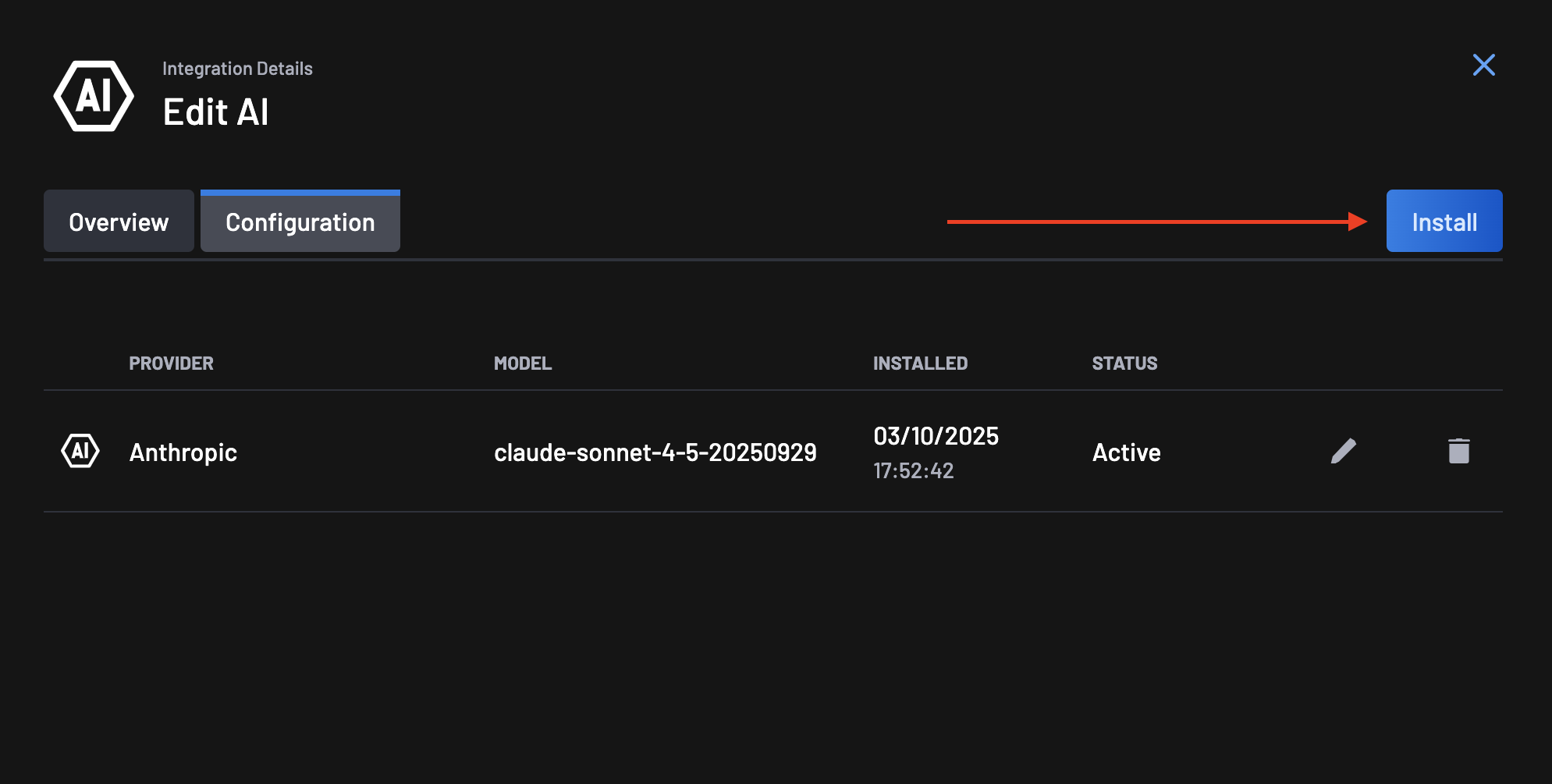

Click the "Install" button in the top right to add an AI provider.

-

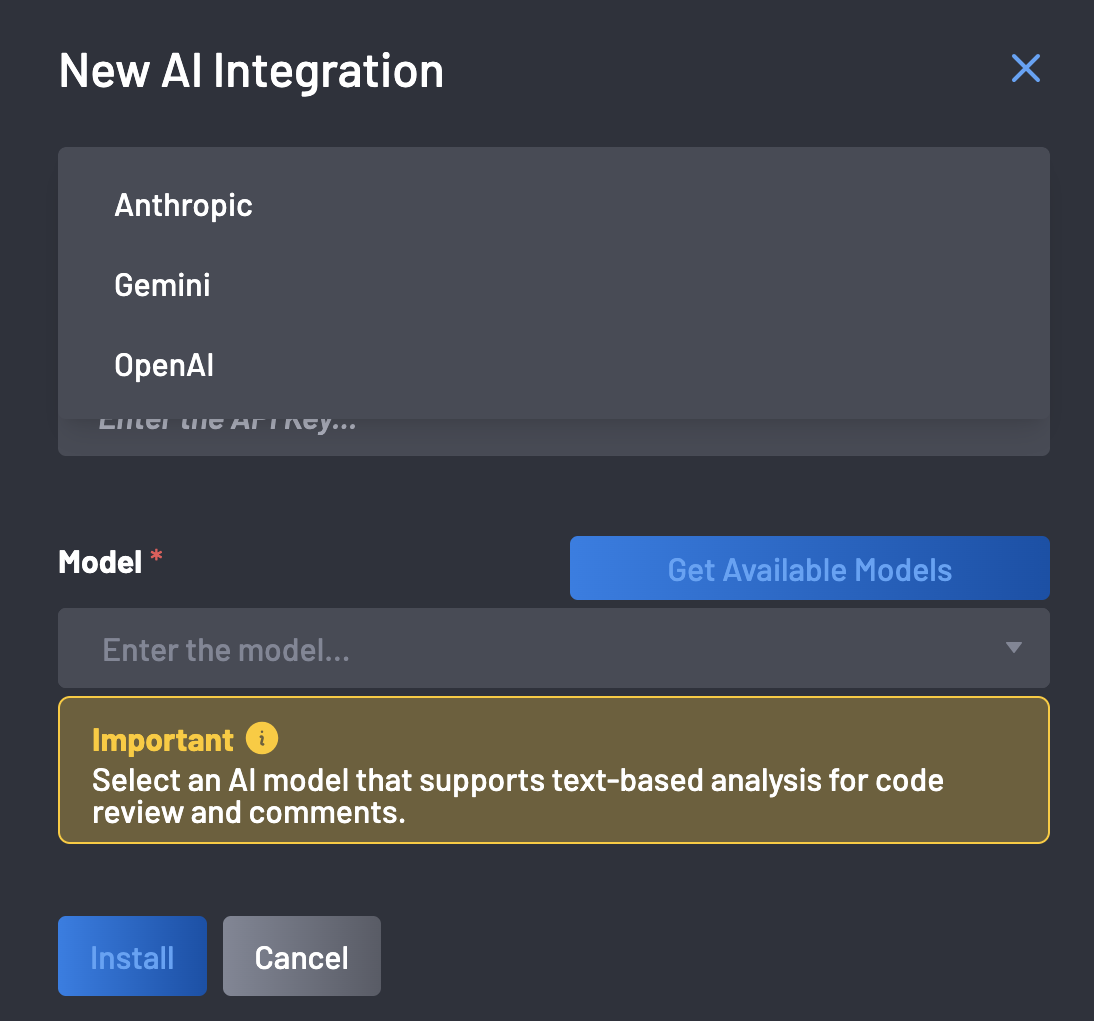

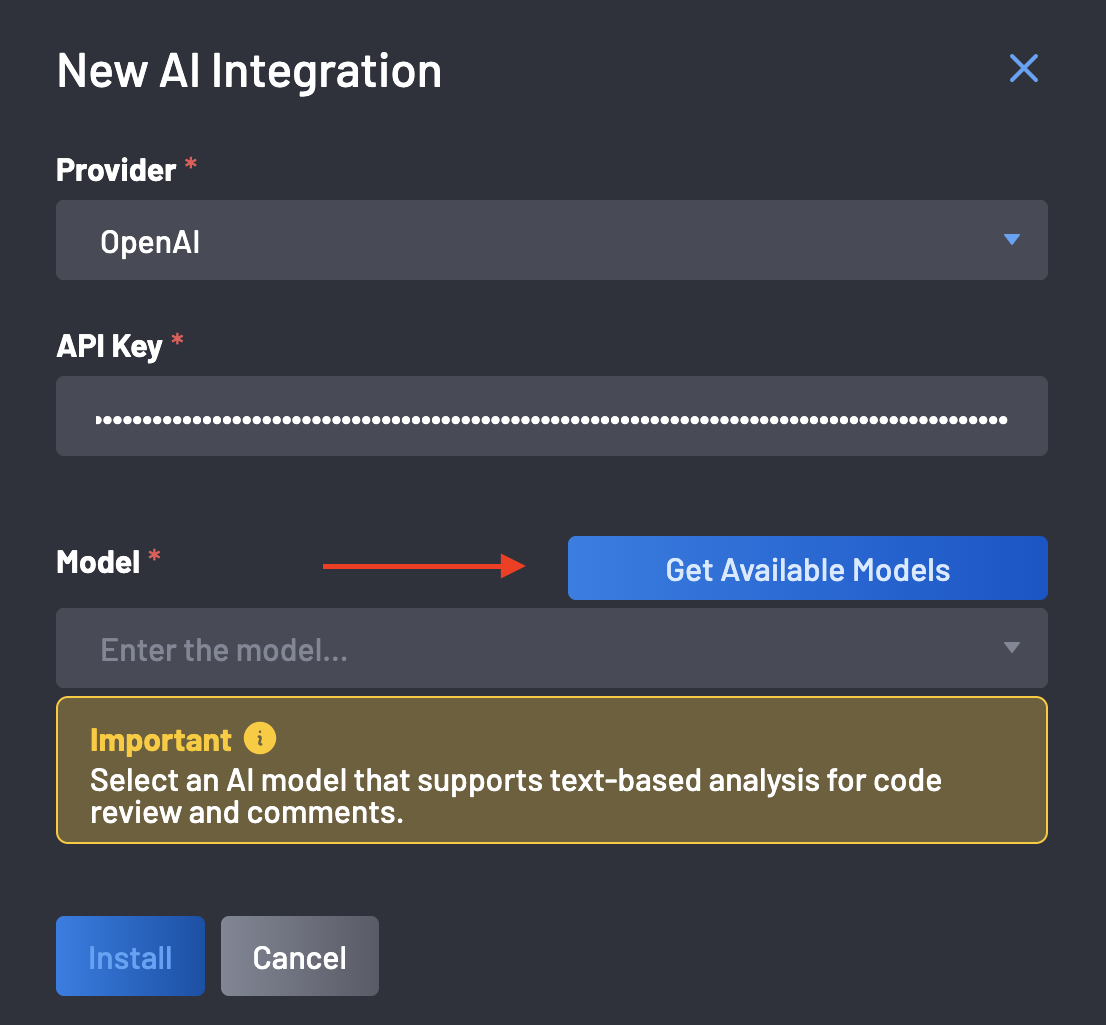

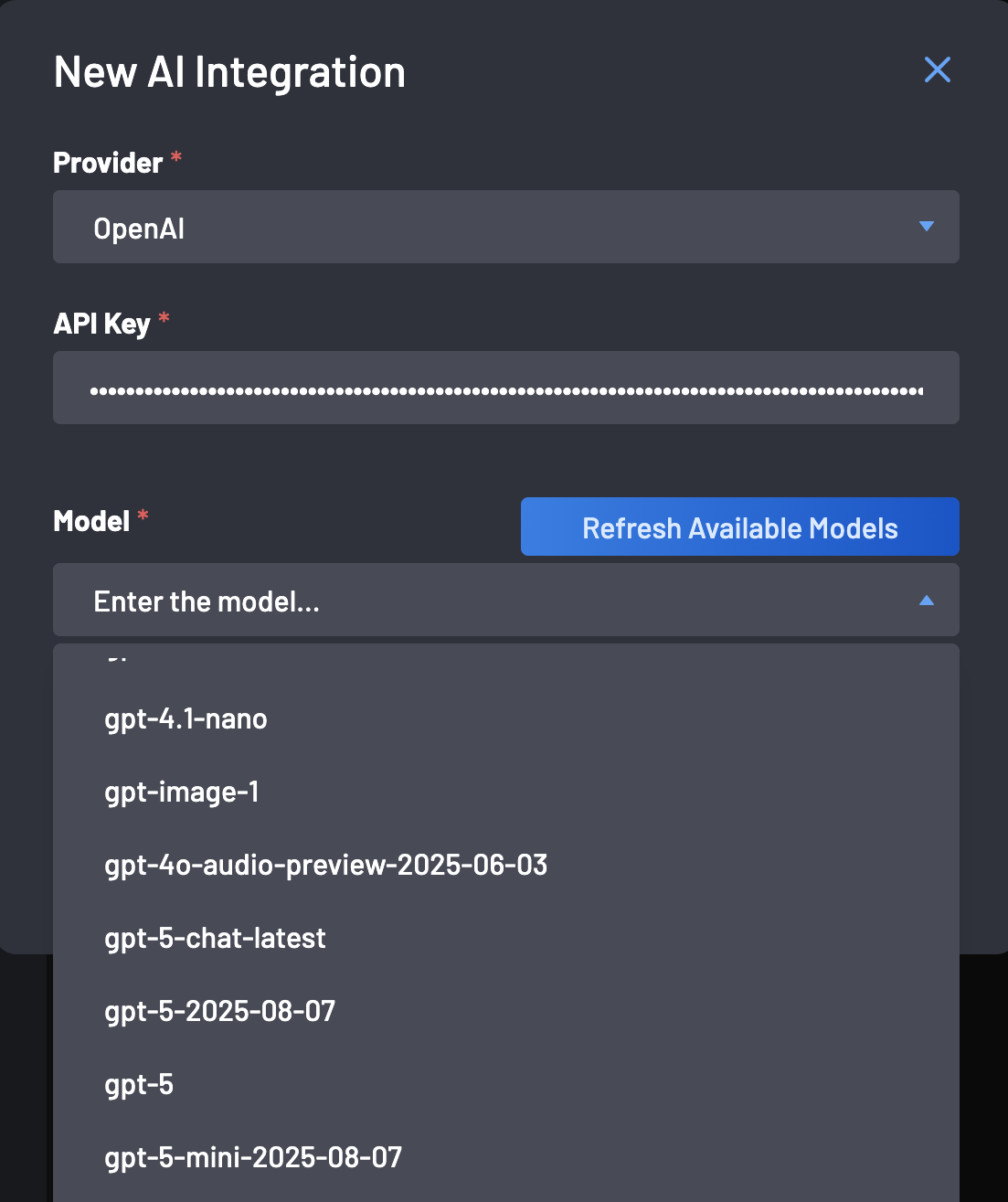

Select a Provider: From the Provider dropdown menu, choose the AI provider you wish to connect (e.g., OpenAI, Bedrock, Gemini, Anthropic).

- Paste your API key from the selected provider into the API Key field and click the Get Available Models button. BoostSecurity will use the provided API key to query the provider and populate the list of available models.

Important

When enabling AI in your application, make sure to choose a model designed for text understanding and generation. Models specialized in image, audio, or speech processing cannot perform code review or generate text comments. We recommend selecting a chat or code-oriented model (for example, models labeled "chat", "code", or "text"). Using an unsupported model may cause the AI review to fail or return empty results.

- Select a Model: From the Model dropdown menu, select the specific AI model you want to use for generating code suggestions.

- Install the Integration: Once you have filled in all the required fields, click the "Install" button to save the configuration.

Your AI provider is now connected. BoostSecurity will begin using this integration to provide automated remediation suggestions in your SCM tools.

Important

You can only install a single provider per account for generating suggestions.

Additional AI Features

Secrets Filtering (GitLeaks-Based)

BoostSecurity automatically applies local GitLeaks scanning to detect and mask secrets before sending any code to the AI provider.

- Sensitive values are replaced with `******`.

- Ensures credentials never leave your environment.

- Reduces the risk of secret leakage to third-party LLMs.
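To make the masking step concrete, here is a minimal sketch of replacing detected secrets with `******` before text leaves the machine. It is a simplified stand-in: the two regular expressions are illustrative assumptions, whereas Gitleaks ships a much larger maintained rule set, and nothing here represents BoostSecurity's actual implementation.

```python
import re

MASK = "******"

# Illustrative patterns only (assumptions for this example).
SECRET_PATTERNS = [
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Quoted values assigned to names such as api_key, secret, token, password.
    re.compile(
        r"""(?i)\b(?:api[_-]?key|secret|token|password)\b\s*[:=]\s*["']([^"']{8,})["']"""
    ),
]


def _mask_match(match: re.Match) -> str:
    """Mask the captured value if the pattern has a group, else the whole match."""
    if match.lastindex:
        text = match.group(0)
        start, end = match.span(1)
        offset = match.start()
        # Keep the variable name and quotes, replace only the secret value.
        return text[: start - offset] + MASK + text[end - offset:]
    return MASK


def mask_secrets(source: str) -> str:
    """Return a copy of the source with every pattern match masked."""
    for pattern in SECRET_PATTERNS:
        source = pattern.sub(_mask_match, source)
    return source
```

With these patterns, `mask_secrets('api_key = "sk-test-1234567890abcdef"')` returns `api_key = "******"`, so the variable name stays readable for the model while the value is removed.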

Repository Context Consumption

AI models now read project-specific guideline files, such as:

- `cloud.md`

- `gemini.md`

- other root-level architectural or coding guideline documents

This allows BoostSecurity to produce context-aware fixes, such as:

- enforcing SQLAlchemy over raw SQL,

- applying organization-specific secure patterns,

- following internal engineering conventions.
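The idea of feeding guideline files to the model can be sketched as follows. This example assumes the files sit at the repository root and are simply prepended to the remediation request; the function names, the prompt layout, and the character limit are hypothetical and do not describe BoostSecurity's internals.

```python
from pathlib import Path

# File names taken from this guide; extend the tuple for other guideline documents.
GUIDELINE_FILES = ("cloud.md", "gemini.md")


def collect_guidelines(repo_root, names=GUIDELINE_FILES, max_chars=4000):
    """Read the guideline files that exist at the repository root.

    Each file is truncated to max_chars (an arbitrary limit chosen for this
    sketch) so that a long document cannot crowd out the finding itself.
    """
    root = Path(repo_root)
    sections = []
    for name in names:
        path = root / name
        if path.is_file():
            text = path.read_text(encoding="utf-8", errors="replace")[:max_chars]
            sections.append(f"## Guidelines from {name}\n{text.strip()}")
    return "\n\n".join(sections)


def build_prompt(finding_description, guidelines):
    """Combine project guidelines with the finding into one request text."""
    parts = []
    if guidelines:
        parts.append("Follow these project conventions when proposing a fix:")
        parts.append(guidelines)
    parts.append(f"Security finding to fix:\n{finding_description}")
    return "\n\n".join(parts)
```

If `cloud.md` contained a rule such as "Use SQLAlchemy instead of raw SQL", that sentence would appear in the prompt ahead of the finding, which is how a suggested fix could end up following the project's convention.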

AI-Powered False Positive Detection

AI can identify findings that appear to be false positives based on code context and recommend:

- marking the finding as "no boost" (suppression),

- adding justification to the PR.

This reduces noise and improves developer trust in the scanning pipeline.

Managing Existing Integrations

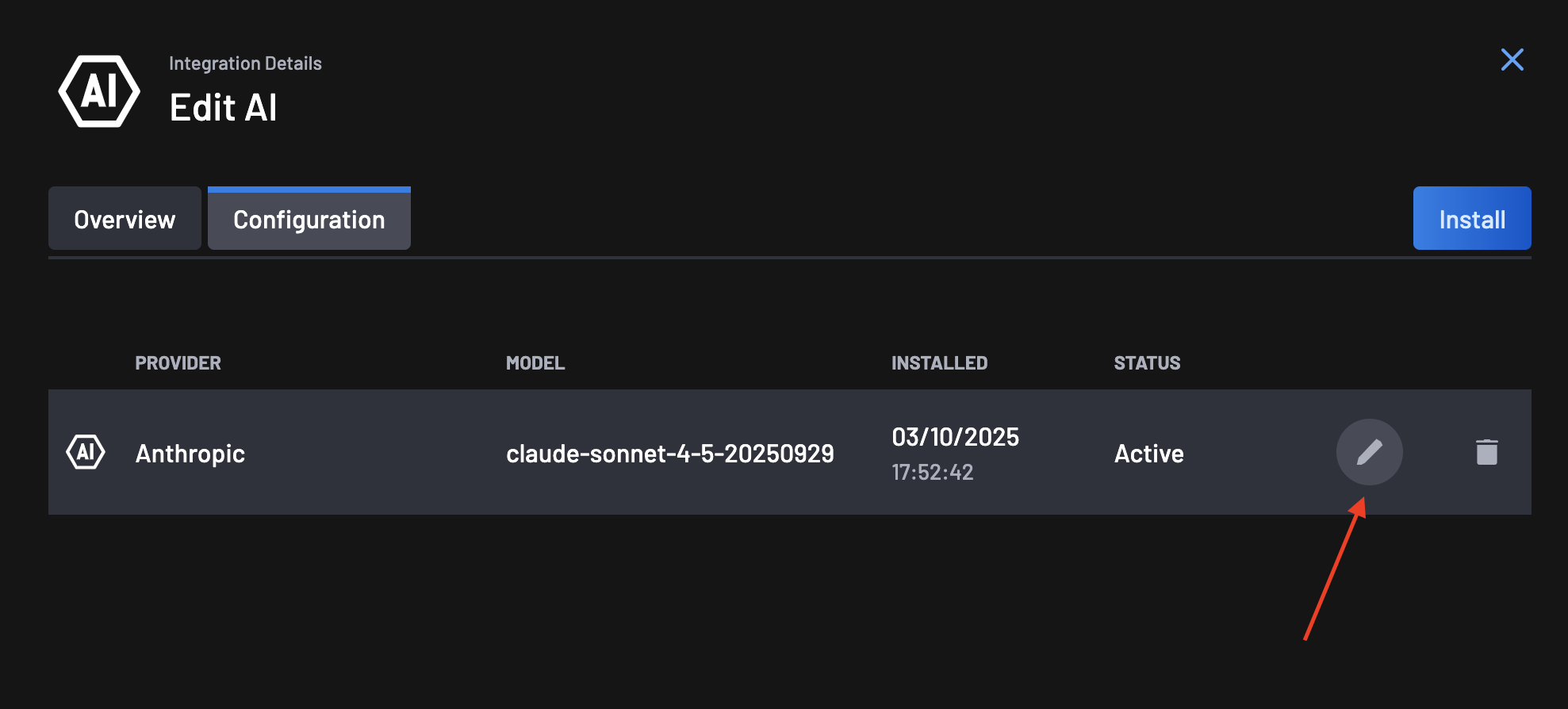

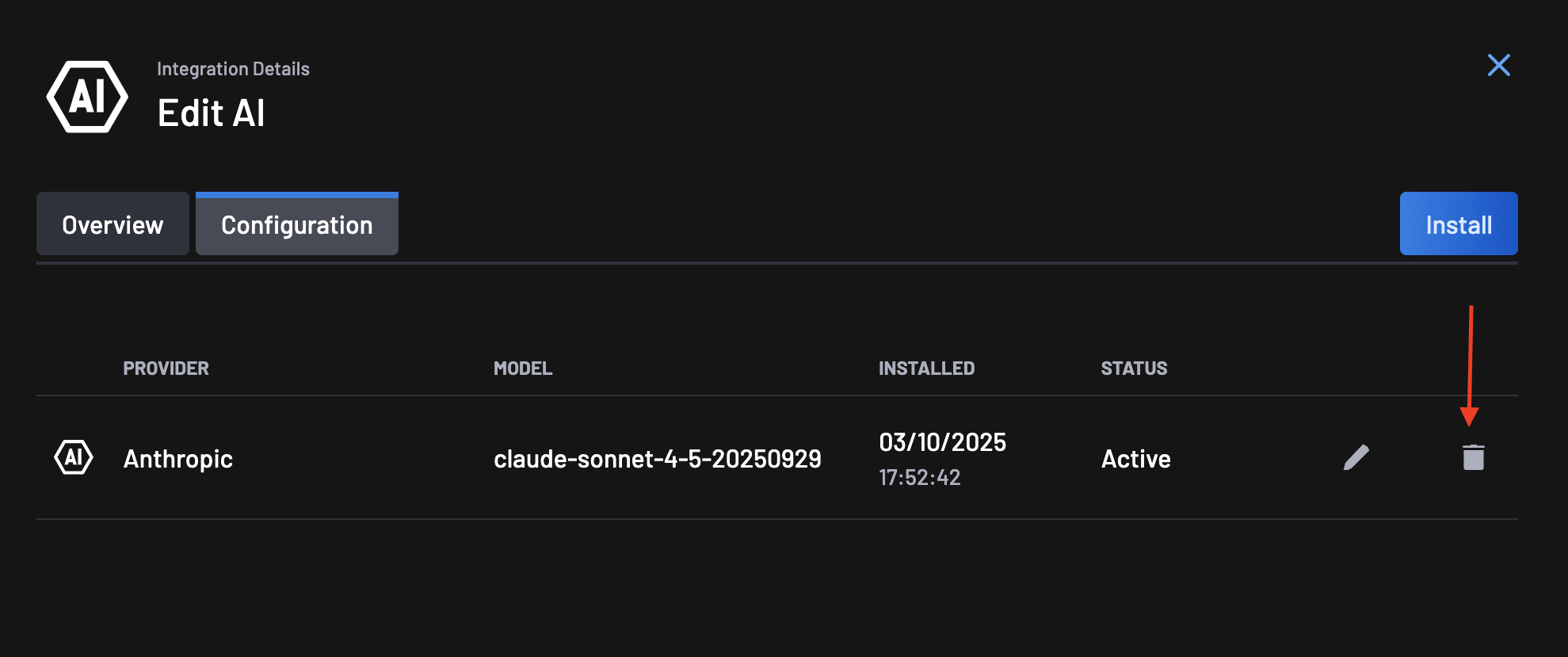

You can manage your configured AI providers at any time from the Integrations > AI page.

- Edit: To change the selected model or update the API key, click the pencil icon next to the provider.

- Delete: To remove an integration, click the trash icon.

Troubleshooting

| Error / Issue | Possible Cause | Solution |
|---|---|---|
| "Get Available Models" Fails or Returns Empty | Invalid API key or missing permissions. | Verify the key, regenerate if needed, and update your configuration. |
| AI Suggestions Not Appearing in Pull Requests | Unsupported model or SCM misconfiguration. | Ensure a code-capable model is selected, verify SCM integration, and check scan logs. |
| Poor Quality or Irrelevant Suggestions | Model too weak or lacking context. | Try a more advanced model and ensure guideline files (e.g., `cloud.md`) are present for context consumption. |
| Secrets Appear in Suggestion Previews | Repository contains patterns not recognized as secrets by default. | Confirm masking is enabled, and update your repo’s secret patterns if needed. |
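When "Get Available Models" fails, it can help to check the key against the provider directly, outside BoostSecurity. The sketch below assumes an OpenAI key and calls OpenAI's public `GET /v1/models` endpoint with a bearer token. It is not BoostSecurity's own implementation, the helper names are chosen for this example, and other providers use different URLs and authentication headers.

```python
import json
import urllib.error
import urllib.request

# OpenAI's public model-listing endpoint; other providers differ.
OPENAI_MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Prepare the authenticated GET request without sending it."""
    return urllib.request.Request(
        OPENAI_MODELS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def parse_model_ids(payload: bytes) -> list:
    """Extract and sort the model identifiers from the JSON response body."""
    body = json.loads(payload)
    return sorted(item["id"] for item in body.get("data", []))


def list_models(api_key: str) -> list:
    """Send the request and return the model IDs visible to this key."""
    try:
        with urllib.request.urlopen(build_models_request(api_key), timeout=30) as resp:
            return parse_model_ids(resp.read())
    except urllib.error.HTTPError as err:
        # HTTP 401 generally indicates an invalid or revoked key.
        raise RuntimeError(f"Provider rejected the request: HTTP {err.code}") from err
```

Calling `list_models` with a working key returns the model identifiers that key can access. If the call raises with HTTP 401, the key itself is the likely problem rather than the BoostSecurity configuration.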

Frequently Asked Questions (FAQ)

Q1: Can I connect multiple AI providers at the same time?

No, you can only install a single provider per account for generating suggestions.

Q2: How is my API key stored?

API keys are encrypted and stored securely following industry best practices.

Q3: Which SCM platforms support AI-assisted remediation?

BoostSecurity supports AI-assisted remediation in pull requests on GitHub, GitLab, Bitbucket, and Azure DevOps (ADO).

Q4: Does AI consider my repository’s coding guidelines?

Yes. If guidelines like `cloud.md` or `gemini.md` are present, the AI uses them to produce style and policy-compliant fixes.

Q5: Does Boost use its own AI model?

No. Boost does not use, run, or operate its own AI model for the purposes of this integration. Boost connects only to the AI provider you have configured and are already approved to use. Your code is sent to that provider and is never analyzed, stored, or processed in Boost’s cloud or by any Boost-owned AI or LLM.