Amazon Bedrock – Model Constraints and Limitations

When using Amazon Bedrock as your AI provider, there are important constraints related to model availability, regions, and invocation methods that may affect which models you can use for AI-assisted remediation.

These constraints are enforced by AWS and apply to all Bedrock consumers.

Inference Profiles vs On-Demand Throughput

Some newer Anthropic models available through Bedrock (for example, recent Claude Sonnet versions) do not support on-demand throughput.

Instead, these models require invocation through an inference profile.

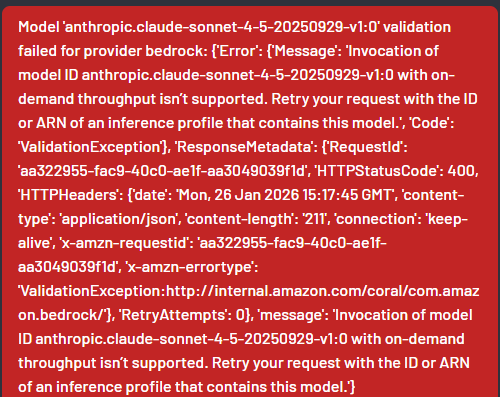

If you attempt to use one of these models without an inference profile, Bedrock will return a validation error similar to:

Error

Invocation of model ID <model-id> with on-demand throughput isn’t supported. Retry your request with the ID or ARN of an inference profile that contains this model.

This error occurs during model validation or runtime invocation and prevents the model from being used for remediation generation.
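As a minimal sketch, a caller could recognise this error and retry through an inference profile as shown below. It assumes a boto3 `bedrock-runtime` client (or any object with a compatible `converse` method) and that the failure surfaces as an exception carrying a botocore-style `response` dict. The function names and the marker substring are illustrative assumptions, not part of BoostSecurity or AWS.

```python
# Assumed substring of the error message quoted above.
ON_DEMAND_MARKER = "on-demand throughput"


def is_on_demand_unsupported(exc: Exception) -> bool:
    """Return True if exc looks like Bedrock's on-demand throughput error."""
    # botocore's ClientError exposes the parsed error under .response["Error"].
    error = getattr(exc, "response", {}).get("Error", {})
    return (
        error.get("Code") == "ValidationException"
        and ON_DEMAND_MARKER in error.get("Message", "")
    )


def converse_with_fallback(client, model_id: str, profile_id: str, prompt: str) -> dict:
    """Try the bare model ID first, then retry once with the inference profile ID."""
    messages = [{"role": "user", "content": [{"text": prompt}]}]
    try:
        return client.converse(modelId=model_id, messages=messages)
    except Exception as exc:
        # Re-raise anything that is not the on-demand throughput error.
        if not is_on_demand_unsupported(exc):
            raise
        return client.converse(modelId=profile_id, messages=messages)
```

In practice, configuring the inference profile ID or ARN up front avoids the failed first call; the fallback is shown only to make the error handling concrete.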

Region Availability Requirements

Inference profiles are not restricted to a single AWS region.

Each inference profile is associated with multiple AWS regions, and Bedrock requires that your AWS credentials have permission to invoke the model in every region covered by the selected inference profile.

If your credentials are restricted to a single region, Bedrock will reject requests for models that require inference profiles, even if the model appears available.

This behavior is specific to newer Anthropic models and does not apply to older models that still support single-region, on-demand invocation.
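To see which regions a given inference profile spans, one option is to read the model ARNs attached to the profile. The sketch below assumes the dictionary shape returned by `list_inference_profiles` on the boto3 `bedrock` client (entries of `inferenceProfileSummaries`, each with `models[].modelArn`). The sample profile and its IDs are made up for illustration.

```python
def profile_regions(profile_summary: dict) -> list[str]:
    """Return the sorted, de-duplicated regions referenced by a profile's model ARNs."""
    regions = set()
    for model in profile_summary.get("models", []):
        # ARN layout: arn:partition:service:region:account:resource
        parts = model["modelArn"].split(":")
        if len(parts) > 3 and parts[3]:
            regions.add(parts[3])
    return sorted(regions)


# Hypothetical summary, shaped like one entry of inferenceProfileSummaries.
example_profile = {
    "inferenceProfileId": "us.example-model-v1",
    "models": [
        {"modelArn": "arn:aws:bedrock:us-east-1::foundation-model/example-model-v1"},
        {"modelArn": "arn:aws:bedrock:us-west-2::foundation-model/example-model-v1"},
    ],
}

print(profile_regions(example_profile))  # ['us-east-1', 'us-west-2']
```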

Impact on BoostSecurity AI Integration

Because BoostSecurity:

- Uses the Bedrock API exactly as AWS defines it, and

- Does not manage inference profiles or regional permissions on your behalf,

the following requirements must be met for Bedrock-based AI remediation to work correctly:

- The selected Bedrock model must either:
    - support on-demand throughput, or
    - be accessible via an inference profile

- Your AWS credentials must allow access to all regions associated with the inference profile

- The model must support text generation and code analysis

If these conditions are not met, model validation will fail and AI remediation will not run.
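The first and third requirements can be checked against model metadata before configuring the integration. The sketch below assumes a dictionary shaped like one entry of `modelSummaries` from the boto3 `bedrock` client's `list_foundation_models` call, with `inferenceTypesSupported` and `outputModalities` fields. The `check_model` helper is hypothetical and is not how BoostSecurity validates models; region permissions cannot be read from this metadata and need a separate check.

```python
def check_model(summary: dict, has_inference_profile: bool) -> list[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems = []

    # Requirement 1: on-demand throughput or an inference profile.
    on_demand = "ON_DEMAND" in summary.get("inferenceTypesSupported", [])
    if not (on_demand or has_inference_profile):
        problems.append(
            "model supports neither on-demand throughput nor an inference profile"
        )

    # Requirement 3: the model must be able to produce text.
    if "TEXT" not in summary.get("outputModalities", []):
        problems.append("model does not produce text output")

    return problems


# Hypothetical metadata for a model that needs an inference profile.
summary = {
    "modelId": "example-model-v1",
    "inferenceTypesSupported": [],
    "outputModalities": ["TEXT"],
}
print(check_model(summary, has_inference_profile=True))
```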

Recommended Configuration for Bedrock Users

To avoid integration issues:

- Verify model support
    - Confirm whether the selected model supports on-demand throughput or requires an inference profile
    - Refer to the official AWS Bedrock model list for region and invocation details

- Review region permissions
    - Ensure your AWS credentials are not restricted to a single region
    - Grant access to all regions required by the inference profile

- Prefer stable, text-capable models
    - Choose models explicitly designed for text or code generation
    - Avoid image-, audio-, or speech-only models
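The region permissions step can be sketched as an IAM policy that allows invocation of both the inference profile and the underlying foundation model in each region. The account ID, profile ID, model ID, and region list below are placeholders, and the `invoke_policy` helper is hypothetical; take the real values from your own account and the AWS documentation for the chosen profile.

```python
import json


def invoke_policy(account_id: str, profile_id: str, model_id: str, regions: list[str]) -> dict:
    """Build an IAM policy allowing invocation in every listed region."""
    resources = []
    for region in regions:
        # Inference profile ARNs are account-scoped.
        resources.append(
            f"arn:aws:bedrock:{region}:{account_id}:inference-profile/{profile_id}"
        )
        # Foundation model ARNs have an empty account field.
        resources.append(f"arn:aws:bedrock:{region}::foundation-model/{model_id}")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


# Placeholder values for illustration only.
policy = invoke_policy(
    "123456789012", "us.example-model-v1", "example-model-v1", ["us-east-1", "us-west-2"]
)
print(json.dumps(policy, indent=2))
```

An identity policy like this does not override organization-level controls: a service control policy that denies requests outside one region can still block invocation.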

Troubleshooting Bedrock-Specific Errors

| Error Message | Likely Cause | Resolution |
|---|---|---|
| On-demand throughput isn’t supported | Model requires an inference profile | Invoke the model through an inference profile ID or ARN, using credentials that cover the profile's regions |
| ValidationException (HTTP 400) | Insufficient region access | Expand AWS region permissions |
| Model appears but cannot be invoked | Model not available in selected region | Verify model region compatibility |
| AI remediation does not run | Unsupported model configuration | Select a compatible Bedrock model |

Additional References

For the most up-to-date information on Bedrock model support and regional availability, refer to the official AWS documentation: